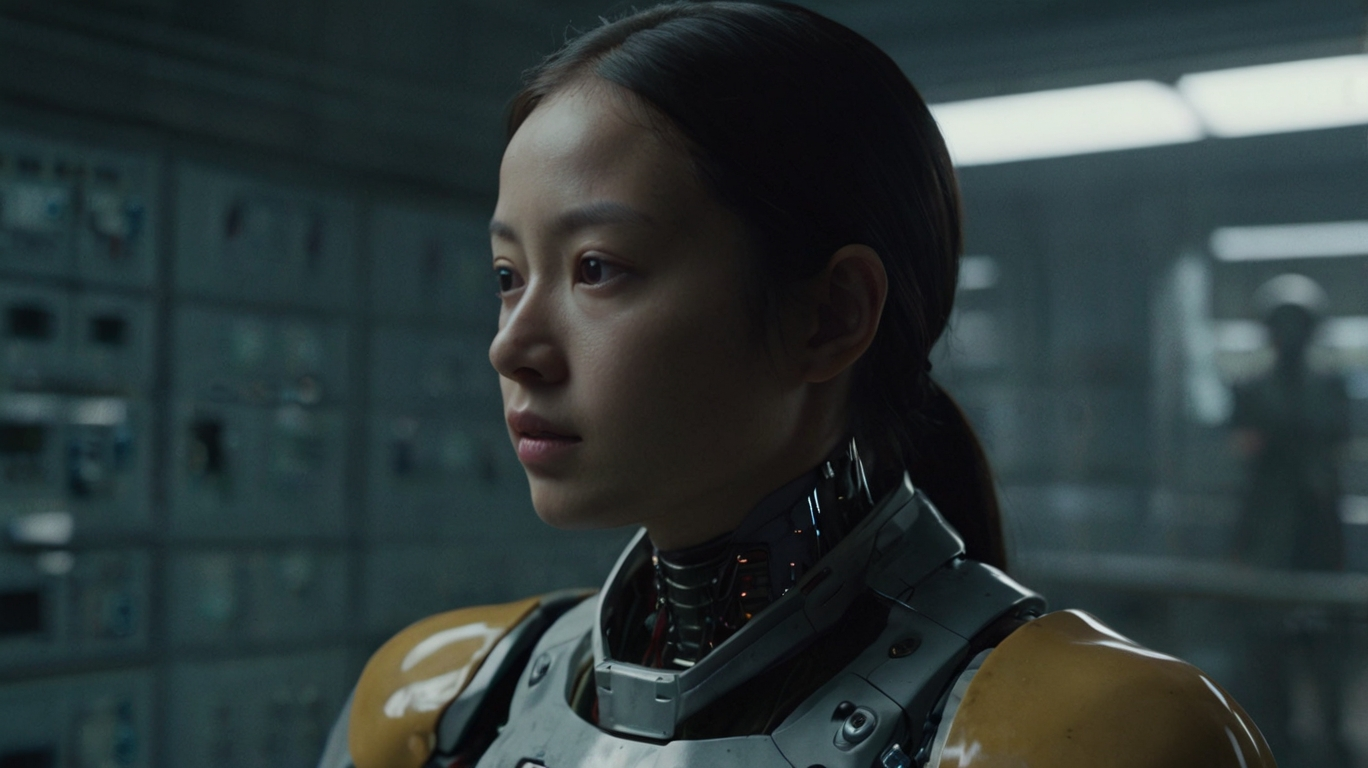

Artificial intelligence (AI) is everywhere, from virtual assistants like Siri and Alexa to customer service chatbots. As these systems become more sophisticated, a question follows: Can AI understand human empathy? This article explores whether AI can grasp this complex human capacity and what that means for how we interact with technology.


What Is Human Empathy?

Empathy is a human trait that allows us to feel, understand, and connect with others on an emotional level. It is often divided into three types:

- Cognitive Empathy: Understanding someone else’s thoughts and perspective.

- Emotional Empathy: Sharing and feeling another person’s emotions.

- Compassionate Empathy: Acting on our feelings to help others.

Human empathy is foundational to building meaningful relationships and creating a supportive community.

How AI Mimics Empathy

AI doesn’t feel emotions like humans do, but it can be programmed to recognize and respond to emotional cues, simulating empathy to some extent.

Example: AI in Customer Service

Customer service chatbots use programmed phrases like “I’m sorry for the inconvenience” or “I understand how you feel.” This language is designed to sound empathetic, making interactions feel more human. For instance, Zendesk’s AI chatbots are trained to respond empathetically based on customer tone and keywords.

Role of Natural Language Processing (NLP) in AI

Through Natural Language Processing (NLP), AI can detect certain words or phrases that signal emotions. NLP allows AI systems to analyze language patterns and craft responses that seem empathetic, even if they don’t genuinely “feel” empathy.
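To make the idea concrete, here is a minimal sketch of cue-based emotion detection paired with canned replies. The cue words, emotion labels, and function names are all illustrative assumptions, not taken from Zendesk or any other product, and real NLP systems typically rely on trained models rather than hand-written word lists.

```python
import re

# Hypothetical cue lexicon: words and labels are illustrative assumptions.
EMOTION_CUES = {
    "frustration": {"frustrated", "annoyed", "ridiculous", "unacceptable"},
    "worry": {"worried", "anxious", "afraid", "concerned"},
    "gratitude": {"thanks", "thank", "appreciate", "grateful"},
}

# Canned replies written to sound empathetic; the program itself feels nothing.
RESPONSES = {
    "frustration": "I'm sorry for the inconvenience. Let's get this fixed.",
    "worry": "I understand how you feel. Here is what we can do next.",
    "gratitude": "You're welcome! Happy to help.",
    "neutral": "Thanks for reaching out. How can I help?",
}


def detect_emotion(message: str) -> str:
    """Return the label whose cue words appear most often, else 'neutral'."""
    words = re.findall(r"[a-z']+", message.lower())
    counts = {
        label: sum(word in cues for word in words)
        for label, cues in EMOTION_CUES.items()
    }
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else "neutral"


def reply(message: str) -> str:
    """Pick the canned reply that matches the detected emotion."""
    return RESPONSES[detect_emotion(message)]
```

Calling `reply("I am so frustrated, this is unacceptable")` returns the apology, while `reply("Where is my order?")` falls back to the neutral greeting. The reply only looks empathetic: it is a dictionary lookup keyed on matched words.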

Why AI Can’t Truly Understand Empathy

Despite advancements, AI lacks the core human qualities needed for real empathy.

Lack of Human Experiences

Empathy is deeply tied to personal experiences and the ability to relate to someone else’s situation. Since AI lacks consciousness and life experiences, it cannot fully relate to human emotions.

No Emotional Depth

Humans experience emotions in a layered way, influenced by culture, memories, and personal history. AI lacks this depth; it can only simulate empathy based on patterns, not genuine feelings.

Absence of Morality and Ethical Judgment

Empathy often involves ethical decisions, like choosing to comfort someone in distress. AI doesn’t make moral choices; it follows programmed rules and patterns learned from data. Therefore, it lacks the conscious decision-making that empathy requires.

How AI Recognizes Human Emotion

While AI can’t feel empathy, it can be programmed to recognize and respond to emotional cues to create supportive interactions.

Sentiment Analysis in AI

Through sentiment analysis, AI assesses the emotional tone of text. For example, social media companies use sentiment analysis to flag harmful posts and identify support requests, most commonly by monitoring comments for bullying or hostile language.
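As a rough illustration, sentiment analysis in its simplest form can be sketched as a lexicon lookup: each word carries a score, and the scores are summed. The word list, weights, and threshold below are hypothetical; production systems generally use much larger lexicons or trained classifiers.

```python
import re

# Hypothetical mini-lexicon with made-up weights, for illustration only.
LEXICON = {
    "love": 2, "great": 2, "good": 1, "happy": 1,
    "bad": -1, "sad": -1, "hate": -2, "stupid": -2, "ugly": -2,
}
NEGATIONS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """Sum word scores, flipping polarity after a negation ("not good")."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        value = LEXICON.get(word, 0)
        if i > 0 and words[i - 1] in NEGATIONS:
            value = -value
        score += value
    return score


def flag_for_review(text: str, threshold: int = -2) -> bool:
    """Flag a comment when its score is at or below the (assumed) threshold."""
    return sentiment_score(text) <= threshold
```

With this lexicon, `flag_for_review("You are stupid and ugly")` is true and `flag_for_review("Have a good day")` is false. A sketch like this misses sarcasm, slang, and context, which is one reason flagged content usually still needs human review.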

Facial Recognition and Emotion Detection

Some AI systems use facial analysis, a close relative of facial recognition, to estimate basic emotions from facial expressions. Retailers sometimes employ emotion-recognition technology to gauge customer satisfaction, aiming to improve customer experiences based on observed emotions.

Real-World Applications of Empathy-Simulating AI

AI that mimics empathy is already making an impact in several fields:

AI in Healthcare

In mental healthcare, chatbots like Woebot and Wysa help people manage stress, anxiety, and depression by offering supportive responses. Although these AI tools don’t truly empathize, their responses are crafted to feel compassionate, helping users feel understood and supported.

AI in Education

Educational tools with empathetic programming, like Carnegie Learning’s MATHia, encourage students by adapting to their learning pace and offering positive reinforcement. This simulated empathy fosters a supportive learning environment.

AI Companions

AI companions such as Paro the Seal (used in elderly care) offer companionship through empathy-mimicking responses. By responding to touch and tone, these robots provide a comforting presence without actually feeling emotions.

Ethical Considerations of Empathy-Mimicking AI

As AI gets better at simulating emotional intelligence, ethical questions emerge. How much should we rely on empathetic AI? And what are the risks?

Risk of Emotional Dependence on AI

People may form emotional connections with AI, potentially impacting their real-life relationships. For example, virtual influencers like Lil Miquela, a computer-generated character, have garnered real followers who interact with the character as though it were a friend, blurring the line between human and machine.

Privacy Concerns in Emotion Recognition

Emotion-recognition technology often requires data from facial expressions, voice, or other personal details, raising privacy concerns. How this data is collected, stored, and used brings up questions about personal security and data misuse.

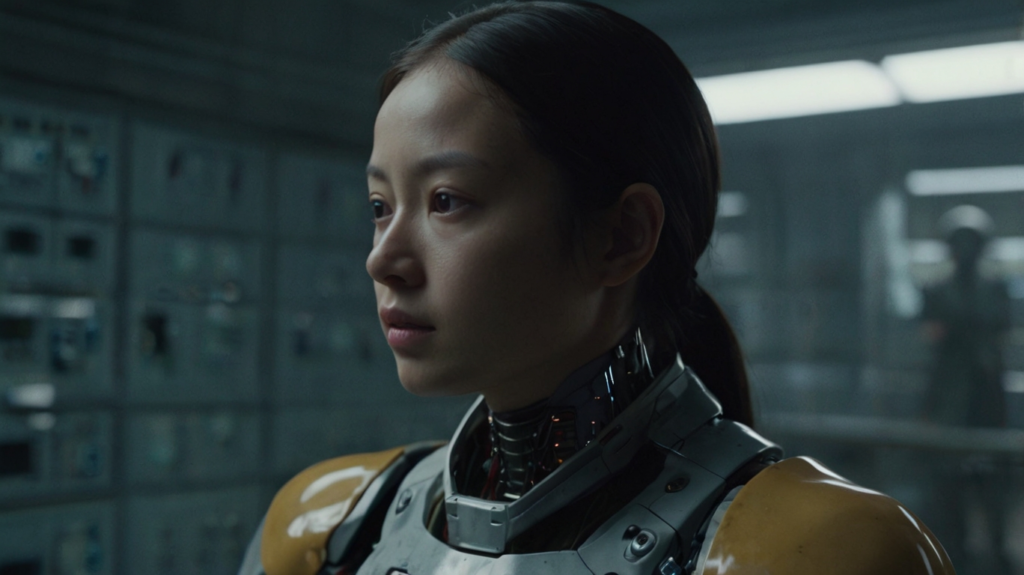

The Future of Empathetic AI

While AI may not fully understand empathy, ongoing research aims to make AI responses feel more natural and intuitive.

Affective Computing: A Step Towards Emotional AI

Affective computing is an area that combines psychology and computer science to develop AI that can better recognize and respond to human emotions. Future advancements in this field could lead to more realistic interactions, where AI not only recognizes emotions but also adjusts its responses based on long-term user interactions.
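One simple way to picture "adjusting based on long-term interactions" is a running mood estimate that is updated after every message. The sketch below uses an exponential moving average; the class name, the smoothing weight, the tone labels, and the assumption that each message arrives with a sentiment value between -1 and 1 are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class MoodTracker:
    """Keeps a running estimate of a user's mood across many messages."""

    alpha: float = 0.3  # weight given to the newest message (assumed value)
    mood: float = 0.0   # running estimate, from -1 (negative) to 1 (positive)

    def update(self, sentiment: float) -> float:
        """Blend a new sentiment reading into the long-term estimate."""
        sentiment = max(-1.0, min(1.0, sentiment))  # clamp to [-1, 1]
        self.mood = self.alpha * sentiment + (1 - self.alpha) * self.mood
        return self.mood

    def tone(self) -> str:
        """Choose a response tone from the current estimate."""
        if self.mood < -0.3:
            return "gentle"
        if self.mood > 0.3:
            return "upbeat"
        return "neutral"
```

A tracker that receives several negative messages in a row drifts toward the "gentle" tone, while a single bad message barely moves it. The system is still following arithmetic, not feeling concern, but the smoothing makes its behavior look attentive over time.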

The Limits of Empathy Simulation

Despite progress, it’s unlikely that AI will fully replicate human empathy. Empathy involves consciousness, self-awareness, and complex emotions—traits that AI, even as it advances, may never truly possess.

Conclusion: Can AI Understand Human Empathy?

AI cannot genuinely understand or feel empathy, but it can mimic empathetic responses that make interactions feel more human. This capability has real-world benefits, particularly in customer service, healthcare, and education, enhancing how we experience technology. However, as AI becomes better at simulating emotional awareness, society must address the ethical and personal implications of relying on machines for emotional support.